Jokosher is people

Recently I have noticed that a few people seem to think [Jokosher](https://www.jokosher.org/) is *my* project, and mine alone. I assume this is because I blog about Jokosher quite a lot, designed much of the GUI, and laid the foundations of the project with my posts on the LUGRadio Forums. I want to be utterly clear that Jokosher is *not* just me, and is not just my project. We have a team of people who contribute to many different aspects of the project, and every single one of them is essential for Jokosher to move forward.

My own main contribution is UI design and direction, managing the community (getting new people on board, managing releases, maintaining the website, sourcing dev resources, etc.), and doing chunks of coding (mainly audio) here and there. I now want to highlight the many other people involved and their tremendous contributions:

(in alphabetical order)

* Oscar Carlstedt – Oscar created instrument icons.

* Jason Field – Jason wrote much of the original code for Jokosher when it was JonoEdit.

* John Green – John contributes bug fixes, and fixed up chunks of the VU code.

* Edward Hervey – Edward maintains GNonlin, fixed many bugs for us, and ate cheese.

* Tuomas Kuosmanen – Tuomas created our current logo and some dialog box art.

* Stuart Langridge – Stuart wrote the initial Cairo code and the fadelines code.

* Alasdair MacLeod – Alasdair maintained the website at the start of the project.

* Dan Nawara – Dan created art and instrument icons.

* Andreas Nilsson – Andreas created instrument icons.

* Laszlo Pandy – Laszlo has done a huge amount of coding in all areas of Jokosher.

* Jeff Ratliff – Jeff is writing most of the documentation for Jokosher at the moment.

* Gregory Sheeran – Greg created our very first logo.

* Michael Sheldon – Michael has coded in many different parts and also hacks on the GStreamer channel splitting element.

* Ben Thorp – Ben contributed much of the event related code.

So there we have it: Jokosher is more than just me. It is 14 more people than just me, and the community is always growing. My primary interest in Jokosher is on the design, direction and UI side, and I also like to write code when I get a chance, but without this band of merry men, Jokosher would not be what it is today. I want to ensure that each of them is credited in the Jokosher story every step of the way. You guys rock! 🙂

Thinking about GNOME 3.0

As many of you will know, I am quite the usability pervert. Understanding how people use computers and creating better and more intuitive interfaces fires me up, and the mere idea of GNOME 3.0 is interesting to me. The reason why I find GNOME 3.0 exciting is that it presents a dream for us; we are not entirely sure where we are going, but we know it needs to be different, intuitive and better for our users.

While at GUADEC I sat down for a while with MacSlow, and he gave me a demo of [Lowfat](https://macslow.thepimp.net/?page_id=18). For quite some time I have had some vague ideas for the approximate direction of GNOME 3.0, and some of Mirco’s work triggered some of these latent concepts and mental scribblings. I am still not 100% sure where I would like to see GNOME 3.0 go, but some of the fundamental concepts are solidifying, and with my recent addition to the mighty Planet GNOME, I figured I should share some ideas and hopefully cause some useful discussion. I am going to wander through a few different concepts and discuss how we should make use of them in GNOME 3.0, culminating in some ideas and food for thought.

## A more organic desktop

One of the problems with the current GNOME is that it largely ignores true spatial interaction. Sure, we have spatial Nautilus (which is switched off in many distros), but spatial interaction goes much further. If you look at desktop users across all platforms, the desktop itself serves as a ground for immediate thinking and ad-hoc planning in many different ways:

* Immediate Document Handling – the desktop acts as an initial ground for dealing with documents. Items are downloaded to the desktop and poked at before entering more important parts of the computer such as the all-important organised filesystem.

* Grouping – users use the desktop to group related things together. This is pure and simple *pile theory*. The idea is that people naturally group related things together into piles. Look at your desk right now – I bet you have things grouped together in related piles and collections. We don’t maximise this natural human construct on our desktop. More on this later.

* Deferred Choices – the desktop serves as a means to defer choices. This is when the user does not want to tend to a task immediately or needs to attend to it later. An example of this is if you need to remember to take a DVD to work the next day – you typically leave it next to the front door or with your car keys. On the current desktop, the equivalent would be to set an alarm in a special reminder tool, yet more people set ad-hoc reminders than alarms.

* Midstream Changes – it is common for users to begin a task, and then get distracted or start doing something else. An example of this is if you start making a website. You may make the initial design, then need to create graphics and get distracted playing with different things in Inkscape. The desktop often acts as a midstream dumping ground for these things. Work-in-progress documents are often placed on the desktop, where they act as a reminder to pick them up later (see *deferred choices* earlier).

It is evident that the desktop is an area that provides a lot of utility, and this utility maps to organic human interaction – collecting things together, making piles, creating collections, setting informal reminders, grouping related things. These are all operations on *things*, and are the same kind of operations we do in our normal lives.

Part of the problem with our current desktop is that there is a dividing line between things on the desktop and things elsewhere. It is a mixed maze of meta-data and inter-connected entities that should be part of the desktop itself. As an example, when I was writing my book, I created word processor documents, kept notes about the book in Tomboy, saved bookmarks in Firefox and kept communications in Gmail and Gaim. The single effort of writing a book involved each of these disparate, unconnected resources storing different elements of my project. I would instead like to see these things (a) integrated into contextual projects, and (b) manageable at a desktop level. More on contextual projects later.

## Blurring the line between files, functions and applications

The problem we have right now is that the desktop is just not as integrated as it could be. If you want to manage files, you do it in a file manager, if you want to do something with those files you do it in an application, if you want to collect together files into a unit, you use an archive manager. Much of this can be done on the desktop itself, but we need to identify use cases and approach the problem from a document-type level.

Let me give you an example. Pictures, photographs and other images are a common type of media. The different things you may want to do with those images include:

* Open them

* Edit them

* Compare them

* Collect them together by some form of relevance (such as photos from a trip, or pictures of mum and dad)

* Search for them

These tasks involve a combination of file management, photo editing applications, photo viewing applications and desktop search. Imagine this use case instead:

I want to look through my photos. To do this I jump to the ‘photo collection’ part of my desktop (no directories), and my collection has different piles of photos. I can then double-click on a pile and it opens up in front of me. Each photo can be picked up and moved around in a physically similar way to a normal desk (this is inspired by MacSlow’s LowFat). I can also flip photos over and write notes and details on the back of them. I can put two photos side by side and increase their size to compare them, or select a number of photos and make them into a pile. This pile can then be transported around as a unit, and maybe flicked through like a photo album. All of this functionality occurs at the desktop level – I never double-click a photo to load it into a photo viewer, I just make the photo bigger to look at it. All of the manipulation and addition of meta-data (by writing on the back of the photo) stays within the realm of real world object manipulation, and obeys pile theory and spatial orientation.

The point here is that the objects on the desktop (which are currently thought of as icons in today’s desktop) are actual real world objects that can be interacted with in a way that is relevant to their type. In the above use case you can make the items bigger to view them, compare them side by side, and scribble notes on them. These are unique to certain documents and not others. You would not zoom, compare and scribble notes on audio for example, but you would certainly use pile theory on audio to collect related audio together (such as albums).

## Applications

So, if we are trying to keep interaction with objects at the desktop level, how exactly do we edit them and create new content? How do today’s applications fit into this picture? Well, let me explain…

The problem with many applications is that they provide an unorganised collection of modal tools that are not related to context in any way. I have been thinking about this a lot recently with regards to [Jokosher](https://www.jokosher.org/), and I discussed it in my talk at GUADEC. Take, for example, Steinberg’s Cubase:

In Cubase, if you want to perform an operation, you need to enable a tool, perform the operation, disable the tool and then do something else. There is a lot of tool switching going on, and toolbar icons are always displayed, often in situations where a tool either cannot be used or would make no sense to use. The problem is that it follows the philosophy of *always show lots of tools onscreen as it makes the app look more professional*. Sure, it may look professional, but it has a detrimental impact on usability.

I believe that tools should only ever be displayed when pertinent to the context. As an example, in Jokosher we have a bunch of waveforms:

The first point here is that we don’t display the typical waveforms you see in other applications. Waveforms are usually used to indicate the level in a piece of audio, and as such, we figured that musicians just want to see essentially a line graph, instead of the spiky waveform in most applications. This immediately cuts down the amount of irrelevant information on screen. Now, if you select a portion of the wave in Jokosher, a tray drops down:
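To make the difference concrete, here is a minimal Python sketch of one way a line-graph level display could be derived: reduce each block of samples to its peak level. It assumes samples are floats between -1.0 and 1.0, and `level_envelope` and `block_size` are hypothetical names. This is not Jokosher's actual drawing code.

```python
def level_envelope(samples, block_size=1024):
    """Reduce raw samples to one peak level per block (hypothetical helper
    illustrating a line-graph style level display)."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    levels = []
    for start in range(0, len(samples), block_size):
        block = samples[start:start + block_size]
        # Peak absolute amplitude in this block; the sign of the wave is discarded
        levels.append(max(abs(s) for s in block))
    return levels
```

Drawing a line through those per-block peaks gives the kind of level graph described above, with far fewer points than the raw waveform.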

(well, at the moment, it drops down, but does not visually look like a tray, so run with me on this for a bit!)

Inside this tray are buttons for tools that are relevant to *that specific selection*. Here we are only ever displaying the tools that are pertinent to the context, and this has a few different benefits:

* We don’t bombard our users with endless toolbars

* Tools are always contextual, which makes the interface more discoverable and intuitive

* We reduce the potential for error by restricting the number of tools available in a given context

* There are fewer buttons to accidentally click on, and this lowers the hamfistability of our desktop 😛

Now, take this theory of contextual tools, and apply it at the desktop level. Using our earlier example with photos, I would like to see contextual tools appear when you view a photo at a particular size. So, if I have my collection of photos and I increase the size of a photo to look at it, I would like to see some contextual toolbars float up when I hover my mouse over the photo to allow me to make selections. When I have made a selection I should then see more tools appear. There are two important points here:

* Firstly, you don’t load the photo into an application. As you view the photo at the desktop level, the functionality associated with an editing application seamlessly appears as contextual tools. This banishes the concept of applications. Instead, you deal with things, and interact with those things immediately.

* Secondly, tools are always contextual and relevant to the media type. For a photo or a document, selections make sense; for an audio file, you should be able to apply effects and adjust the volume; for a text document, you should be able to change font properties. Everything is relevant to the context.
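One way to think about this is as a lookup from *what the object is* and *what you are doing with it* to the handful of tools that make sense. The Python sketch below is purely illustrative: the media types, states, tool names and the `tools_for` helper are all made up for the example.

```python
# Hypothetical registry: tools keyed by (media type, interaction state).
CONTEXT_TOOLS = {
    ("photo", "viewing"): ["select"],
    ("photo", "selection"): ["crop", "rotate", "adjust colours"],
    ("document", "selection"): ["cut", "copy", "bold"],
    ("audio", "viewing"): ["volume", "effects"],
    ("text", "selection"): ["font", "size", "colour"],
}


def tools_for(media_type, state):
    """Return only the tools that make sense for this object in this state."""
    # Unknown contexts get an empty list: show nothing rather than everything
    return CONTEXT_TOOLS.get((media_type, state), [])
```

Anything not in the table gets no tools at all, which is the point: the default is an uncluttered object, not a wall of toolbars.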

## The contextual desktop at a project level

To really make the desktop feel contextual, we need to make it sensitive to projects and tasks. At the moment, tasks and sub-tasks are dealt with in isolation rather than as part of a bigger, grander picture. Let me give you a use case with today’s desktop:

I am working on a project with a client to build a website for him. I decide I want to send some emails to him, so I fire up my email client and dig through my inbox to find the mail he sent me yesterday. I then reply to him. As I work, I realise that I need to speak to him urgently, so I log on to IM. Within my buddy list I see that he is online, so I have a chat with him. While we are chatting, my friend pops up to discuss the most recent Overkill album. As I am working, I don’t really want to talk about the album right now, so I either ignore him or make my excuses. After finishing the discussion with the client, I load up Firefox and hunt through my bookmarks to find a relevant page and start merging the content into the customer’s site. To do this I load up Bluefish and look through the filesystem to find my work files and begin the job.

The problem here is that the relevant work is buried deep in other irrelevant items. To make matters worse, some resources such as IM can just prove too distracting, and may never get used (remember *midstream changes* earlier). As such, the really valuable medium of IM is never used for fear of distraction. Now imagine the use case as this:

I am working on a project with a client to build a website for him. I find my collection of projects and enable it, and my entire desktop switches context to that specific project. Irrelevant applications such as games are hidden, and relevant resources are prioritised. When I communicate with the client, only emails and buddies relevant to that project are displayed. When I want to find resources (such as documents) to work on, only those documents that are part of the project are displayed. The entire desktop switches to become aligned to my current working project. This makes me less distracted, more focused and there is less clutter to trip over.
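As a rough sketch of the filtering idea, assume every resource (an email, a buddy, a document, a game) can be tagged with the projects it belongs to. How that tagging happens is left open here, and `Resource` and `in_context` are hypothetical names, not an existing GNOME API.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    name: str
    kind: str                                  # e.g. "email", "buddy", "document", "game"
    projects: set = field(default_factory=set)  # assumed project tags


def in_context(resources, project):
    """Keep only resources tagged with the active project; everything else
    (games, unrelated mail, other buddies) is hidden, not deleted."""
    return [r for r in resources if project in r.projects]


resources = [
    Resource("Re: site mockups", "email", {"client-site"}),
    Resource("client", "buddy", {"client-site"}),
    Resource("friend", "buddy", {"music"}),
    Resource("index.html", "document", {"client-site"}),
    Resource("Frozen Bubble", "game"),
]

print([r.name for r in in_context(resources, "client-site")])
```

Switching project context is then just calling the same filter with a different project name; nothing is moved or thrown away.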

I actually had this idea a while back, and wrote [an article describing it in more detail](https://www.oreillynet.com/onlamp/blog/2005/04/remixing_how_we_use_the_open_s.html). Feel free to have a read of it.

## Conclusion

The point of this blog post is not to sell you these concepts, but to identify some better ways of working which are more intuitive and more discoverable. Importantly, we need to make our desktop feel familiar. This was a point Jef Raskin made as part of his work, and I agree. Some people have been proposing some pretty wacky ideas for GNOME 3.0, but grandiose UI statements mean nothing unless they feel familiar and intuitive. What I am proposing is an implementation of real world context, relevance and physics into our desktop. This will make it more intuitive, less cluttered, less distracting and a better user experience.

I really want to encourage some genuine discussion around this, so feel free to add comments to this blog post, or reply via Planet GNOME. Have fun!

Guess what?

If you are going, get yours!

Microsoft supports OpenDocument

Well, after years of pushing the interoperability line, Microsoft do seem to be actually entertaining the concept of working with others, with the announcement that Microsoft Office 2007 will support the OpenDocument Format. I am sure the news sites will be crawling with this any time now, but what does it actually mean for us? Is this a win for Open Standards or just a PR play?

Well, I am not entirely sure. I think there are two core questions here:

* How far will Microsoft extend its concept of interoperability?

* How does ODF actually fit into Microsoft Office?

For the former, I am fairly cynical about Microsoft’s definition of interoperability. This is not without good reason – I have been invited to many Microsoft events designed to solicit feedback and opinion (Microsoft Technical Summit in Redmond, Open Source discussion session at Reading, various press sessions and discussion events). The problem with Microsoft’s position is that they largely approach interoperability as a one-way mirror; it is a means to interact one way, but not to employ the same principles the other way. This is because two-way interoperability involves a loosening of control. Microsoft seem to define interoperability as ‘making things work with specific vendors’. At a recent discussion session at Microsoft UK in Reading, Nick McGrath displayed a slide with Windows in the middle and a series of other products around it in a circle. The slide implied that Microsoft’s interoperability is defined through agreements, discussions and mutual business relationships with these vendors. Well, it just doesn’t work that way. Interoperability is about allowing the flow of information whether it fits your business plan or not.

For the second issue we need to look at how Microsoft will *actually* support ODF in Microsoft Office 2007. Much of this chatter about Microsoft and ODF is based around the Open XML Translator project. The press release says “in essence, Microsoft will fund and provide architectural guidance to three partners to build the Open XML Translator, a set of tools being developed as open source that enable translation of Office Open XML and ODF documents”. It also says, “as part of this announcement, Microsoft is also announcing the creation of an Interoperability Center for Office 2007 – an add-on that adds itself to the file menu in Office 2007, enabling .PDF, .XLS and .ODF interoperability”. Microsoft have been open that this ODF support is driven by government demand.

Although this looks promising, a colleague informed me that little, if any, code actually exists to do this right now and it is all purely roadmap huzzah, although an early release should be ready soon. It is also interesting to note that this translation project may be buildable on free software, and that brings a whole raft of potential, although naturally licensing issues will need to be resolved. If Microsoft are really committed to interoperability, this translation project should allow the use of Microsoft file formats in OpenOffice.org, Abiword, KOffice and other tools. This is the true test of their word.

Of course, all of this means absolutely nothing without proper education. Sure, people like us get all fired up and excited about file formats, but the regular Joe and Josephine on the street don’t. It is extremely unlikely that the ODF file format will be on by default or even be that discoverable from a usability angle, so we need to educate people about why they should use ODF and what its benefits are. This all boils down to advocacy and the same core concepts that I bang on about in my talks – punters need a *reason* why they should use ODF, and a reason that is not just based on ethics. There needs to be a core functional reason why ODF makes sense within their own context. Sure, in government the context demands interoperability and transparency of information transport, but for home users it’s not the same. The various pro-ODF projects need to think about this carefully. I would love to see something like Creative Commons for ODF – really simple, attractive information about why you should care.

While writing this I just had a call from a few contacts queueing up some conference calls and interviews. I will keep you posted.

New site, Planet GNOME and LUGRadio Live

Firstly, after my custom site punched me in the face one too many times, I have moved to WordPress. My old site was becoming a little too unwieldy, and the benefits of moving to WordPress outweighed the disadvantages. So, check out [archivedblog.jonobacon.com](https://archivedblog.jonobacon.com/) and its snazzy new design. 😛

Secondly, hello [Planet GNOME](https://planet.gnome.org/)!

Finally, [LUGRadio Live 2006](https://www.lugradio.org/live/2006/) is in a few weeks, and the excitement is hotting up. It was mentioned on [El Reg](https://www.regdeveloper.co.uk/2006/07/04/lugradio_returns/) and more and more people are blogging about it and getting all hot under the collar. Two days, over 40 talks, over 15 exhibitors, entirely driven by the community, and all for a fiver to boot. It’s on the 22nd and 23rd July 2006 in Wolverhampton, England, and will be a blast!

770 shazaa and hog skinnery

The majestic Michael ‘Elleo’ Sheldon has been hacking on some interesting [Jokosher](https://www.jokosher.org/) stuff recently. Firstly, he has been hacking on GStreamer to make high end sound cards such as the Delta 44 and Delta 1010 work properly. This involved a [bug report](https://bugzilla.gnome.org/show_bug.cgi?id=346326) and then a patch to `alsasrc` that fixed the problem. This means that Jokosher will be ‘the real shizzle’ for sound cards with more inputs than Matthew Garrett has laptops.

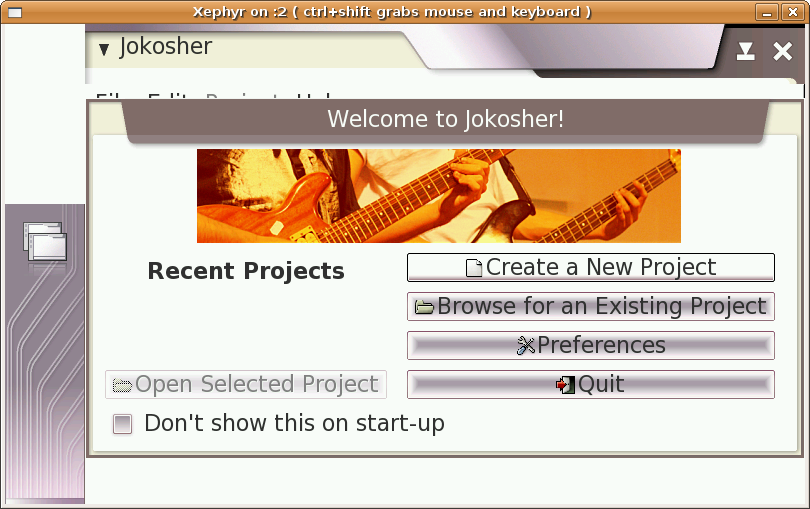

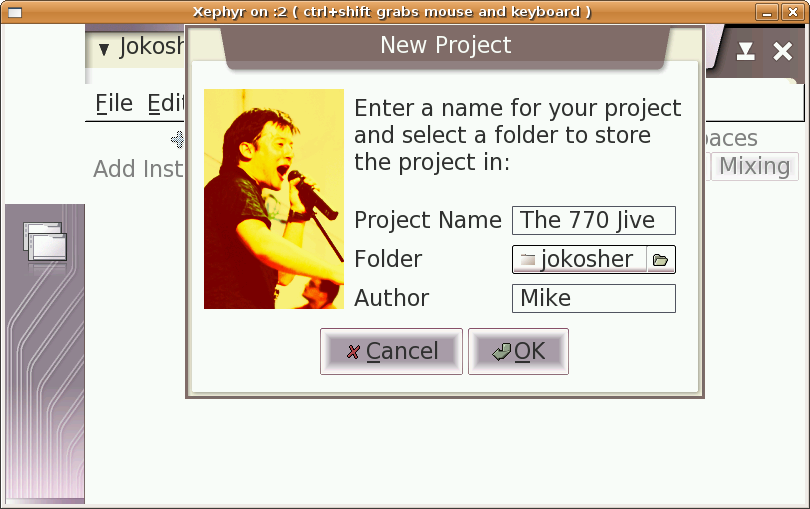

The wistful lad has also [blogged](https://blog.mikeasoft.com/2006/07/03/jokosher-on-the-nokia-770/) about some hacking he has done to make Jokosher run in the Nokia 770 emulator. I had a quick chat with him last night and he said that it was not all that difficult to get running, although it is by no means finished yet. Aside from [Jokosher Remote](https://archivedblog.jonobacon.com/viewcomments.php?id=713), we also have Jokosher Mini for the Nokia 770 planned, and this is encouraging news. A few shots of Elleo’s work for the screenshot junkies among you:

## Everybody hates Ted Haegeaeaeaear

[Including me](https://reverendted.wordpress.com/2006/07/03/everyone-hates-jono-bacon/).

Every so often you meet someone who confounds popular understanding of reason. A person who challenges even the most sane of individuals with his references to hog skinnery and a keenness to mock [Erin Bockovich](https://abock.org/).

In addition to such crimes against reason, he denies his part in the grand-bacon-conspiracy (in which he has worked with Messrs Bockovich, Cooper and Nocera), and has also taken part in senselessly launching nondescript chunks of fruit at salad-dodging GUADEC participants with orange hair. Is there no limit to the depths this man will stoop to?

Say NO to Haegeaear!

## WordPress comes riding to the rescue

Oh, and for those of you who have had problems posting comments, I am in the process of moving to WordPress. My custom blogging engine has frustrated me one too many times. Hopefully I can get something up and running soon and then you can go comment nuts. 🙂

Designing Jokosher Remote

A while back I got a Nokia 770 from work. I was quite looking forward to getting my hands on one, and it turned out to be a pretty nice little device. Obviously, it supports the full on GTK/GStreamer jazz, and my mind started rolling with ideas for applications. One such application was Jokosher Remote.

As a multi-tracker, Jokosher (like any other) has a physical limitation. Musicians tend to be crammed in tiny home studios constructed from spare bedrooms, and fashioned with a combination of guitar amps, computers, books, spare bedding and beer cans. These creative domains are often restrictive, covered in trailing cables and typically hot. In other words, no matter how nice we make Jokosher, the room it is sat in may very well be restrictive and uncomfortable.

Another problem with the home studio is that the computer can be physically too far away from the ideal recording scenario. There are two examples of this:

* The computer is situated away from the instrument. An example of this is if you have a drum kit in the corner of the room and need to keep getting up to hit record on the computer, run to the drums and play the part. This constant getting up and pressure to get back to the kit on time almost always results in mistakes when recording.

* You need to record an instrument in another room for sound separation. In this case, you may want to record a cello in a separate room to ensure that there is no overspill from other instruments. The problem is when you need to control the computer – again, you need to hit record, run to the recording area and play.

In other words, unless your instrument is next to the computer, life can get tough.

The solution to this problem is a remote control, and this is where the Nokia 770 comes in with Jokosher Remote.

## Feature Requirements

The requirements for Jokosher Remote are fairly simple. Jokosher Remote would not record audio on the Nokia 770 itself, but instead control the transport for Jokosher running on the main computer and record to the main computer as per normal.

The core feature requirements are:

* Provide transport control for playing, recording, seeking and stopping.

* Be able to arm instruments for recording. This is important for Jokosher Remote as you may want to record on different instrument tracks for the same physical instrument. As an example, you may want to record a number of different guitar parts on different tracks.

* Be able to set up and record overdubs. Overdubs involve recording over a specific part of a project, and are typically used when you screw something up while recording. Jokosher Remote should allow you to configure these overdub points. An example of this is overdubbing a particularly difficult part in a solo.

* Provide visual feedback. Remote control devices are always susceptible to problems and errors, and we need to provide our users with solid feedback that recording is working correctly and that the connection has not dropped at all.

* Provide nice big buttons that don’t require the 770 pen. The interface should be controllable by fingers.

* Be simple, just like Jokosher. The whole point of Jokosher is to make recording easy, and Jokosher Remote should instill that ethos.

I spent some time chatting with Aq about the UI while at GUADEC and we had some ideas for how things should work. I then sat down on the train while coming back from Brighton to solidify some of these ideas, and have created a bunch of mock-ups to explain how it should work. As ever, these designs are malleable, but I think they form a solid foundation on which the interface can work.

> Another thing I was thinking was that additional features such as a guitar tuner could be merged into the application, but these would need to be separate projects that plug into Jokosher Remote. Is there a guitar tuner for the 770 already? Answers on a postcard…

## UI Design

When the user turns on Jokosher Remote, it will use Avahi to find Jokosher applications on the network. The user can then just select which application to use (they will likely only have one). This saves the user from having to configure network settings. We may need to engineer in some kind of confirmation to avoid anyone with a 770 controlling any old Jokosher computer. My initial hunch is that when the user selects a connection on the 770, the main Jokosher computer will ask for confirmation of the connection. We can flesh this out later.

So, let’s first look at the main screen:

This is the main screen and this is where the recording occurs. Just like in Jokosher, we have the transport controls along the top, and two Workspace buttons – this uniform UI ensures that Jokosher Remote is instantly usable. Inspired by the work of Jeff Raskin, user interfaces should be ‘familiar’, and this is prevalent here.

This screen is the Recording workspace. To record a part, just tap the record button and begin playing. Playback will also display a playhead, just like in the real Jokosher. The play and seek buttons can also be used to skip to other parts, and the timeline can be dragged to find specific parts too. As the user records, the wave will appear (this is also planned in main Jokosher for 0.2). This provides important feedback that what you are playing is (a) being recorded, (b) the connection between Jokosher Remote and Jokosher is working, and (c) you can monitor the approximate volume of the recording – if the wave is solid, you are way too loud.

> NOTE: As in real Jokosher, the status bar is used extensively to indicate instructions. This is available on both workspaces. Additional instructions are available with labels.

To set up a recording, the user taps the Setup workspace button. They then see this:

Here each of the instruments in the project is displayed as a toggle button. Each button has the icon and name of the instrument on it. To arm an instrument for recording, the user just toggles the button. They can now go back to the Recording workspace and record. Each armed instrument appears as a track in the Recording workspace. This again (a) provides feedback that recording is working, and (b) provides feedback that toggled instruments are actually recording.
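The arming behaviour is simple enough to model directly. This Python sketch uses a hypothetical `SetupWorkspace` class (not Jokosher Remote code) that keeps the set of toggled instruments and lists them, in project order, as the tracks the Recording workspace would show.

```python
class SetupWorkspace:
    """Hypothetical model of the Setup workspace's arm toggles."""

    def __init__(self, instruments):
        self.instruments = list(instruments)  # project order is preserved
        self.armed = set()

    def toggle(self, name):
        if name not in self.instruments:
            raise KeyError(name)
        # A second tap disarms, just like a toggle button
        if name in self.armed:
            self.armed.remove(name)
        else:
            self.armed.add(name)

    def recording_tracks(self):
        """Armed instruments, in project order, become Recording workspace tracks."""
        return [n for n in self.instruments if n in self.armed]
```

Keeping project order (rather than tap order) means the tracks on the Recording workspace line up with how the project is laid out in the main Jokosher window.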

> NOTE: Within the Setup workspace the transport buttons are present. I am only 80% sure they are required as they may be useful to audition the project to double check which instruments should be armed. Thoughts are welcome on this.

Aside from transport control and instrument arming, configuring and recording overdubs is essential. In many music applications, overdubs involve setting markers and demand lots of mousing and clicking. The problem with this approach, apart from increased input requirements, is that overdubs are then bound to the concept of time. In reality, no musician cares about the times of an overdub; he or she just knows when to start and finish it.

To create an overdub, the user clicks the Create Overdub button on the screen above. A dialog then pops up:

When creating an overdub, the user essentially wants to seek to the part that needs the overdub and select the start and end points. As such, the user can use the play and seek buttons to get there, and when they reach the area where the overdub starts, they click the START Overdub button to register the start point. The dialog then changes:

As the music continues to play, the user can then hit the END Overdub button to mark the end of that overdub section. Hitting this button will then close the dialog and return to the main screen, but the overdub selection is highlighted:

When the user now presses the record button, the overdub area will be looped. It will keep looping until the user nails the part and hits the Accept Overdub button. At that point the overdub is kept and the screen returns to the normal main screen:

One additional point to make is that it may be useful to automatically prepend 10 seconds of audio before the start overdub point. This gives the user enough time to press record and prepare to play the part.
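Here is a small sketch of the overdub loop arithmetic, assuming positions in seconds and assuming the 10-second pre-roll is played on every pass of the loop. `overdub_loop` and `PRE_ROLL_SECONDS` are hypothetical names for illustration.

```python
PRE_ROLL_SECONDS = 10.0  # the 10 seconds of lead-in suggested above


def overdub_loop(start, end, pre_roll=PRE_ROLL_SECONDS):
    """Return (loop_start, record_start, record_end) in seconds.

    Playback loops from loop_start to record_end; audio is only
    recorded between record_start and record_end.
    """
    if end <= start:
        raise ValueError("END Overdub must come after START Overdub")
    # Clamp so an overdub near the top of the song doesn't seek before 0
    loop_start = max(0.0, start - pre_roll)
    return loop_start, start, end
```

Clamping at zero matters for overdubs near the start of a song, where there are fewer than 10 seconds to prepend.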

## Making it happen

So there we have it, that is the approximate design for Jokosher Remote. Now, is this gonna happen, or is it just a wild fantasy?

Well, after some discussion at GUADEC, Nokia offered us a Nokia 770 to build this application. Aq has expressed an interest in working on this, so the 770 has gone to him so he can start work on it. As such, you can expect Jokosher Remote to be released someday, hopefully soon. Development of Jokosher Remote will occur alongside mainline Jokosher development. I have a 770 too and will contribute to the project in UI and audio related things.

As ever, volunteers are always needed, so drop me a mail if you can help out. Let's make this thing live and make those stuffy studios across the world a little bit nicer to work in.

Man, is Jokosher gonna rock the world…

GUADEC Day 2

Yesterday was another great day at GUADEC. One of the highlights for me was the GStreamer talk given by Wim and Andy. In the talk they discussed how some of the threading works in GStreamer and went on to discuss how clocks work. This could not have been better timed, as I have been looking into clocks to ensure Jokosher syncs properly. After the talk I sat down with Thomas Vander Stichele for an hour and we had a chat about GStreamer and how to attract more people to it. Like the other Fluendo guys, Thomas is a really down-to-earth guy, and it was good to finally sit down and have a chat with him. I am convinced that people just don’t know all the cool stuff you can do with GStreamer. As an example, in the talk yesterday, everyone downloaded a 30-40 line Python script that played a streamed video and synced it across every client. This resulted in a stack of laptops playing a video in sync over the network – videowall stylee awesomeness. Not bad for a short Python script.

GStreamer was also evident as the backbone of some of the coolest projects on show this year at GUADEC. Aside from [Jokosher](https://www.jokosher.org/), we saw three interesting projects in the lightning talks session – PiTiVi, Diva and Elisa. I had seen PiTiVi and Diva before, but Elisa was new. Elisa is set-top box software written in Python using GStreamer and SDL. It is sleek, attractive and pretty well designed, although some chunks of the interface need re-scaling a little. Nonetheless, they are making an awesome product and have a pretty cool community there.

Other stuff:

* Aaron Bockover, author of Banshee, was cajoled by Ted Haeger and Paul Cooper to [specifically exclude](https://cvs.gnome.org/viewcvs/banshee/ChangeLog?rev=1.509&view=markup) the fantastic *Jono’s World Of Metal* music collection (exported with DAAP). Bastard. Listen to metal, it's good for you.

* J5, Edward Hervey, Davyd Madeley and some other guys formed a band and played to the GUADEC faithful. It was good fun. There was then a jam session and I chipped in with some bass playing. Good fun.

* Miguel de Icaza tried to persuade me to use IronPython for Jokosher. Interesting.

* Brother Ted's suitcase arrived three days late. Poor blighter. His case has arrived now and he welcomed a change in underwear. He was also nice enough to let us use his shower, as our cabin has no hot water.

* Last night we had some drinks. It was good to meet JP from Novell, and I finally got to meet Daniel Stone. I have known Daniel for years, but never got to meet him. Funny guy. It was also good to hook up with Bastien and Matt Garrett. Bastien was naturally a smug bastard after the football.

No time to upload photos now – more coming later! See [here](https://archivedblog.jonobacon.com/gallery/main.php?g2_view=core.ShowItem&g2_itemId=3535) for the GUADEC 2006 photo album!

Keep your eyes on [lugradio.org](https://www.lugradio.org/) for GUADEC episodes!!

GUADEC Day 1

Well, here we are in sunny Spain, and GUADEC is, as ever, awesome. Last year was great, and this year is even better. These guys know the definition of *community*.

I don’t have the time to write a huge amount about the first day, so I will summarise:

* Alex Graveley’s Gimmie talk was interesting, and Gimmie is looking like a ‘right corker’ for GNOME 3.0. Alex is doing things the right way – thinking of a concept and hacking up something to show and tell. I was also impressed with just how much he has thought it through. Good job.

* It was good to meet up with Robert Love, Joe Shaw and Larry Ewing. I was pleased to see the [Robert Love hate campaign](https://jonobacon.com/viewcomments.php?id=706) was taken in good spirits. I half expected him to lamp me with a copy of Linux in a Nutshell. 😛

* Managed to hook up with the Fluendo guys, and really enjoyed catching up with Edward Hervey. I am sat in the lightning talks right now about to watch his [PiTiVi](https://www.pitivi.org/) talk. PiTiVi is awesome, and more people should get involved in it. If you like video and want to change the world, get in touch with him.

My talk was yesterday and it seemed to go pretty well. It was well attended, and there were plenty of questions. I spoke for about 45 minutes about the background behind Jokosher and its direction, and then moved on to give a demo. There was a really positive response to the demo, and the audience even gave a round of applause when they saw it working – credit rightly due to the awesome Jokosher community. Not bad for three or four months of hacking.

Jokosher is exciting, and we have the opportunity to make some amazing things happen. I spent some time nattering to J5 after the talk about some of the collaborative possibilities, and the sky is the limit. Let's rock the music world with Jokosher and the GNOME desktop.

## The gallery

Now, sit back, switch on the music from Tony Hart’s gallery, and enjoy some GUADEC photography:

*The Internet’s Mirco ‘MacSlow’ Muller*

*The man, the legend, Edward ‘bilboed’ Hervey*


For those about to hack, we salute you

Here we are at GUADEC, and it is incredibly hot. We got here last night after a torturous journey, but we settled in and had a few beers. Good to meet up with old and new friends, and we are having a ball so far.

Today at 4pm is my talk. If you are reading this at GUADEC, go there. It will be good for you.