Recently I have been talking a little about [building quality and precision into Ubuntu Accomplishments](https://archivedblog.jonobacon.com/2012/05/14/precision-and-reliability-in-ubuntu-accomplishments/). Tonight I put one of the final missing pieces in place, and I thought I would share a little more detail about this work. Some of you might find it useful in your own projects.

Before I get started, though, I want to [encourage you to start playing with our software](https://wiki.ubuntu.com/Accomplishments/Installing). For those of you who experienced a crash when using certain languages with the *Accomplishments Information* viewer, I released a `0.1.2` update earlier that fixes it.

## Automated Testing

As we continue to grow the [Ubuntu Community Accomplishments](https://launchpad.net/ubuntu-community-accomplishments) collection, it is becoming harder to make sure every accomplishment keeps working day to day. We already have 28 accomplishments, and the number is growing! What’s more, the community accomplishment scripts decide whether you have accomplished something by checking third-party services such as Launchpad. These external services may change their APIs, adjust how they work, or add and remove services. We therefore need to know right away when one of our accomplishments stops working and needs updating.

To do this I wrote a tool called *battery*. Each accomplishment has a test, and *battery* reads that test and feeds the accomplishment script two kinds of validation data: data that should succeed and data that should not validate. For example, for the *Ubuntu Member* accomplishment, the success case is an existing member’s email address (such as my own). The failure case is the email address of a Launchpad user who is not a member. The original script needs the user’s email address to assess this accomplishment, so the *battery* test simply supplies the same type of information, with one value that should trigger success and one that should trigger failure.
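The post does not show what a *battery* test file looks like. As a rough illustration, here is a minimal sketch that assumes a hypothetical INI layout with one section of data that should validate and one section that should not. The section names, the `BatteryTest` class, and the accomplishment id are all invented for this example.

```python
import configparser
from dataclasses import dataclass


@dataclass
class BatteryTest:
    """One accomplishment's test: inputs that should and should not validate."""
    accomplishment: str
    success: dict[str, str]  # data that should make the script exit 0
    failure: dict[str, str]  # data that should make the script exit 1


def load_test(text: str) -> BatteryTest:
    """Parse a test definition written in a hypothetical INI layout."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return BatteryTest(
        accomplishment=parser["test"]["accomplishment"],
        success=dict(parser["success"]),
        failure=dict(parser["failure"]),
    )


# Hypothetical test file for the Ubuntu Member accomplishment.
SAMPLE_TEST = """
[test]
accomplishment = ubuntu-community/ubuntu-member

[success]
email = member@example.com

[failure]
email = not-a-member@example.com
"""
```

Calling `load_test(SAMPLE_TEST)` gives you both sets of inputs, so a runner can exercise the same script once with each set.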

This approach allows us to test for three outcomes:

* That the valid email address returns exit code `0` (the script ran successfully and the user is verified as being an Ubuntu Member).

* That the invalid email address returns exit code `1` (the script ran successfully but the user is not an Ubuntu Member).

* That the script returns exit code `2` if it hits an internal problem.
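The three outcomes come down to checking a script's exit status. Here is a minimal sketch of that check, assuming each accomplishment script can be launched as a separate process. The constant names and the `classify` and `run_case` helpers are hypothetical and are not taken from *battery* itself.

```python
import subprocess
import sys

# The three exit codes described above (constant names are illustrative).
EXIT_VERIFIED = 0      # ran successfully; the user has the accomplishment
EXIT_NOT_VERIFIED = 1  # ran successfully; the user does not have it
EXIT_ERROR = 2         # the script itself hit an internal problem

OUTCOMES = {
    EXIT_VERIFIED: "verified",
    EXIT_NOT_VERIFIED: "not verified",
    EXIT_ERROR: "script error",
}


def classify(returncode: int) -> str:
    """Translate an accomplishment script's exit code into an outcome."""
    return OUTCOMES.get(returncode, f"unexpected exit code {returncode}")


def run_case(cmd: list[str], expected: int) -> bool:
    """Run one accomplishment script and report whether it exited as expected."""
    result = subprocess.run(cmd, capture_output=True, text=True)
    print(f"expected {classify(expected)!r}, got {classify(result.returncode)!r}")
    return result.returncode == expected


if __name__ == "__main__":
    # Stand-in for a real script: a child Python process that exits with 1.
    fake_script = [sys.executable, "-c", "import sys; sys.exit(1)"]
    print(run_case(fake_script, EXIT_NOT_VERIFIED))
```

A full run would call `run_case` once with the success data expecting `EXIT_VERIFIED` and once with the failure data expecting `EXIT_NOT_VERIFIED`. Any other exit code is flagged.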

This works because *battery* includes a customized version of the general `accomplishments.daemon` module that we use for the back-end service. In the code, I override the back-end module and load this custom module in its place. As a result, the original accomplishment script does not need to be modified. Normally `get_extra_information()` calls the back-end daemon to gather the user’s details. The custom module that ships with *battery* provides its own `get_extra_information()` that returns the test data instead, so *battery* can run the tests.
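One way to do this kind of override in Python is to register a stub module in `sys.modules` before the script runs. Python checks that dictionary before it searches the filesystem, so the unmodified script imports the stub instead of the real module. The sketch below assumes that layout. The import line in the toy script and the signature of its `get_extra_information()` call are guesses for illustration, and *battery*'s actual mechanism may differ (for instance, placing its own module earlier on `sys.path`).

```python
import sys
import types
from typing import Any


def install_fake_daemon(test_data: dict[str, Any]) -> None:
    """Register a stub accomplishments.daemon module in sys.modules.

    A later `from accomplishments.daemon import get_extra_information`
    inside an unmodified script then resolves to this stub.
    """
    package = types.ModuleType("accomplishments")
    package.__path__ = []  # mark it as a package
    daemon = types.ModuleType("accomplishments.daemon")

    def get_extra_information(*args: Any, **kwargs: Any) -> dict[str, Any]:
        # Ignore what the script asks for; always hand back the test data.
        return test_data

    daemon.get_extra_information = get_extra_information
    package.daemon = daemon
    sys.modules["accomplishments"] = package
    sys.modules["accomplishments.daemon"] = daemon


def run_script(source: str) -> int:
    """Execute a script's source in-process and return its exit code."""
    try:
        exec(compile(source, "<accomplishment>", "exec"), {"__name__": "__main__"})
    except SystemExit as exc:
        if exc.code is None:
            return 0
        return exc.code if isinstance(exc.code, int) else 1
    return 0


# A toy accomplishment script. Its import line and the call signature of
# get_extra_information() are assumptions made for this sketch.
SAMPLE_SCRIPT = """
import sys
from accomplishments.daemon import get_extra_information

info = get_extra_information("launchpad-email")
members = {"member@example.com"}  # stand-in for a real Launchpad lookup
sys.exit(0 if info["email"] in members else 1)
"""
```

Running `install_fake_daemon({"email": "member@example.com"})` and then `run_script(SAMPLE_SCRIPT)` exercises the script's success path without touching the real daemon.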

Originally *battery* only produced text output, which meant someone had to run it manually to see the results. So last night I added HTML output support, and I set *battery* to run once a day and automatically update the HTML results. You can see the output [here](https://213.138.100.229/battery/).

Beyond listing every accomplishment’s test results, the report has two important features:

* It shows the failures: this provides a simple way for us to dive into the accomplishments and fix issues where they occur.

* It shows which tests, if any, are missing. This gives us a TODO list of tests that we need to write.
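Here is a minimal sketch of a report that puts failures and missing tests first. It assumes the results arrive as a hypothetical mapping from accomplishment id to `True` (passed), `False` (failed), or `None` (no test written yet). The real *battery* report format may look quite different.

```python
from html import escape
from typing import Optional


def render_report(results: dict[str, Optional[bool]]) -> str:
    """Render test results as a small HTML page.

    `results` maps accomplishment id -> True (passed), False (failed),
    or None (no test written yet).
    """
    failures = sorted(a for a, ok in results.items() if ok is False)
    missing = sorted(a for a, ok in results.items() if ok is None)
    passed = sorted(a for a, ok in results.items() if ok is True)

    def section(title: str, items: list[str]) -> str:
        # Escape ids so odd characters cannot break the page markup.
        rows = "".join(f"<li>{escape(item)}</li>" for item in items)
        return f"<h2>{escape(title)} ({len(items)})</h2><ul>{rows}</ul>"

    # Failures and missing tests come first because they need action.
    body = (
        section("Failures", failures)
        + section("Missing tests", missing)
        + section("Passed", passed)
    )
    return f"<html><head><title>battery results</title></head><body>{body}</body></html>"
```

Putting the actionable sections first means a quick glance at the page shows what needs fixing or writing.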

While this was useful, it still relied on us remembering to visit the web page to see the results, so days could pass without anyone noticing a failure.

Tonight I fixed this by adding email output support to *battery*. I can now pass an email address as a command-line switch, and *battery* will email a report of the test run. I also made *battery* default to sending an email only when there are failures or missing tests, so it does not produce reports that need no action.

With this feature I have set *battery* to send a daily “Weather Report” to the [Ubuntu Accomplishments mailing list](https://launchpad.net/~ubuntu-accomplishments-contributors); this means that whenever we see a weather report, something needs fixing. 🙂
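The "only email when something needs fixing" rule can be sketched with the standard library's `email.message.EmailMessage`. The sketch reuses the same hypothetical result mapping (`True` passed, `False` failed, `None` missing), and the sender address is invented. It only builds the message. Actually sending it could be done with something like `smtplib.SMTP(host).send_message(msg)`, depending on how mail is set up on the machine running *battery*.

```python
from email.message import EmailMessage
from typing import Optional


def build_weather_report(
    results: dict[str, Optional[bool]], to_addr: str
) -> Optional[EmailMessage]:
    """Build a report email, or return None when nothing needs action.

    `results` maps accomplishment id -> True (passed), False (failed),
    or None (no test written yet).
    """
    failures = sorted(a for a, ok in results.items() if ok is False)
    missing = sorted(a for a, ok in results.items() if ok is None)
    if not failures and not missing:
        return None  # all green: stay quiet

    lines = ["Failed tests:"] + [f"  {a}" for a in failures]
    lines += ["", "Missing tests:"] + [f"  {a}" for a in missing]

    msg = EmailMessage()
    msg["Subject"] = f"Weather Report: {len(failures)} failing, {len(missing)} missing"
    msg["From"] = "battery@localhost"  # hypothetical sender address
    msg["To"] = to_addr
    msg.set_content("\n".join(lines))
    return msg
```

Returning `None` on an all-green run keeps the "no news is good news" behavior in one place, so whatever schedules the daily run does not need its own check.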

One final, rather nice feature I added was the ability to run *battery* on a single accomplishment. This is useful when we review contributions of new accomplishments. We ask every contributor to add one of these simple tests, and with *battery* we can check validation success, validation failure, and script failure with a single command. This makes reviewing contributions much easier and faster, and it improves our test coverage.
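The post mentions an email switch and the ability to test one accomplishment, but it does not give the actual option names. Here is a minimal `argparse` sketch with hypothetical flags (`--email`, `--html`, and an optional positional accomplishment id). It is meant only to show how such a command line could select the tests to run.

```python
import argparse
from typing import Optional


def parse_args(argv: Optional[list[str]] = None) -> argparse.Namespace:
    """Parse a hypothetical battery command line (flag names are invented)."""
    parser = argparse.ArgumentParser(prog="battery", description="Run accomplishment tests.")
    parser.add_argument("--email", metavar="ADDRESS",
                        help="email a report to ADDRESS if anything needs fixing")
    parser.add_argument("--html", metavar="PATH", help="write an HTML report to PATH")
    parser.add_argument("accomplishment", nargs="?",
                        help="test only this accomplishment (default: all of them)")
    return parser.parse_args(argv)


def select_tests(available: list[str], only: Optional[str]) -> list[str]:
    """Pick every known test, or just the one named on the command line."""
    if only is None:
        return sorted(available)
    if only not in available:
        raise SystemExit(f"no battery test found for {only!r}")
    return [only]
```

With this layout, a reviewer checking a new contribution would run something like `battery ubuntu-community/ubuntu-member`, while the daily job would run `battery --email <list address>` with no positional argument.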

## Graphing

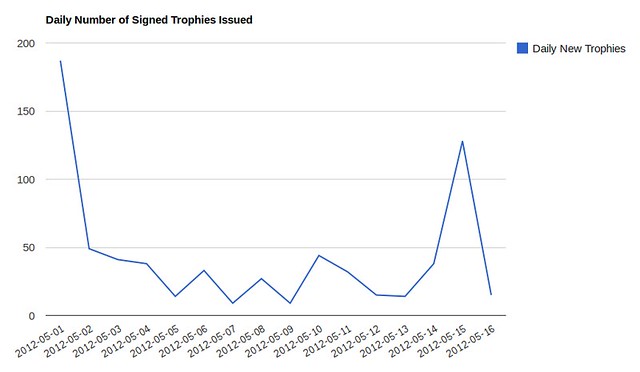

Something Mark Shuttleworth discussed at UDS was the idea of building instrumentation into projects to help us make better decisions about how we build software. I have also been thinking about this for a while. To kick the tyres, I wanted to start by tracking the popularity and usage of Ubuntu Accomplishments, and then explore other ways of learning how people contribute to communities so we can build better ones.

Just before we [released version 0.1](https://archivedblog.jonobacon.com/2012/05/01/first-ubuntu-accomplishments-release/) of Ubuntu Accomplishments, I created a little script that scans the validation server to generate some statistics: the number of new users each day, the number of new trophies issued each day, and the overall totals. Importantly, I only count users and trophies. I am only interested in publishing anonymized data, not in exposing anyone’s individual activity.
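Here is a minimal sketch of that kind of counting. It assumes the raw data can be read as (user id, issue date) pairs for each trophy, and it treats a user as "new" on the day of their first trophy. Both are assumptions, since the post does not describe the validation server's layout. Only aggregate counts leave the function, so no individual's activity ends up in the output.

```python
import csv
import io
from collections import Counter
from datetime import date

Row = tuple[date, int, int, int, int]


def daily_stats(trophies: list[tuple[str, date]]) -> list[Row]:
    """Aggregate (user, issue date) trophy records into anonymous daily counts.

    Returns rows of (day, new_users, new_trophies, total_users, total_trophies).
    A user counts as new on the day of their first trophy (an assumption).
    """
    first_seen: dict[str, date] = {}
    for user, day in trophies:
        if user not in first_seen or day < first_seen[user]:
            first_seen[user] = day

    new_trophies = Counter(day for _, day in trophies)
    new_users = Counter(first_seen.values())

    rows: list[Row] = []
    total_users = total_trophies = 0
    for day in sorted(new_trophies):  # every first-seen day also has a trophy
        total_users += new_users[day]
        total_trophies += new_trophies[day]
        rows.append((day, new_users[day], new_trophies[day], total_users, total_trophies))
    return rows


def to_csv_string(rows: list[Row]) -> str:
    """Write the daily rows as CSV text with a header line."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["date", "new_users", "new_trophies", "total_users", "total_trophies"])
    for day, *counts in rows:
        writer.writerow([day.isoformat(), *counts])
    return out.getvalue()
```

For example, two users earning trophies on 2012-05-01, followed by one returning user and one newcomer on 2012-05-02, produces the rows `(2012-05-01, 2, 2, 2, 2)` and `(2012-05-02, 1, 2, 3, 4)`.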

To do this, my script scans the data and generates a CSV file with the information I am interested in. I then used the rather awesome [Google Charts](https://developers.google.com/chart/) API to take my CSV and generate the JavaScript needed to display the graph.
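Here is a minimal sketch of the CSV-to-chart step. It reads a CSV whose first column is a date label and whose other columns are integer counts, then emits JavaScript that uses the Google Charts loader together with `google.visualization.arrayToDataTable` and `LineChart`. The element id and chart title are placeholders, and a 2012-era page would probably have used the older `google.load` loader instead.

```python
import csv
import io
import json


def csv_to_chart_js(csv_text: str, element_id: str = "chart",
                    title: str = "Ubuntu Accomplishments") -> str:
    """Turn date/count CSV text into a Google Charts line-chart snippet."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    # First column stays a string label; the remaining columns are counts.
    rows = [[r[0]] + [int(v) for v in r[1:]] for r in reader if r]
    table = json.dumps([header] + rows)
    return (
        "google.charts.load('current', {packages: ['corechart']});\n"
        "google.charts.setOnLoadCallback(function () {\n"
        f"  var data = google.visualization.arrayToDataTable({table});\n"
        "  var chart = new google.visualization.LineChart(\n"
        f"      document.getElementById({json.dumps(element_id)}));\n"
        f"  chart.draw(data, {{title: {json.dumps(title)}}});\n"
        "});\n"
    )
```

The generated snippet can be placed in a `<script>` tag after the Google Charts loader script, next to a `<div>` whose id matches `element_id`. Regenerating the page after each statistics run keeps the graph current.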

While this is not exactly instrumentation, it got me thinking about the kinds of data that could be interesting to explore. For example, we could look at which types of contributions in our community are of most interest to our users, how effective our documentation and resources are, and which processes are working better than others. We could also add client-side instrumentation that explores how people use Ubuntu Accomplishments and in what ways they find it rewarding and empowering.

Importantly, none of this instrumentation will happen without people’s consent; privacy always has to be key. But I think exploring patterns and interesting views of data could be a fantastic way to build better software and communities.